When you're building with LLMs like Amazon Bedrock’s Nova, there's a tough question you’ll eventually have to face:

“How do I know my model’s answers aren’t basically AI Slop?”

We’re past the point where eyeballing responses are good enough — we need automated validation at runtime, ideally one that fits neatly into a serverless, production-friendly stack.

So let’s wire it up:

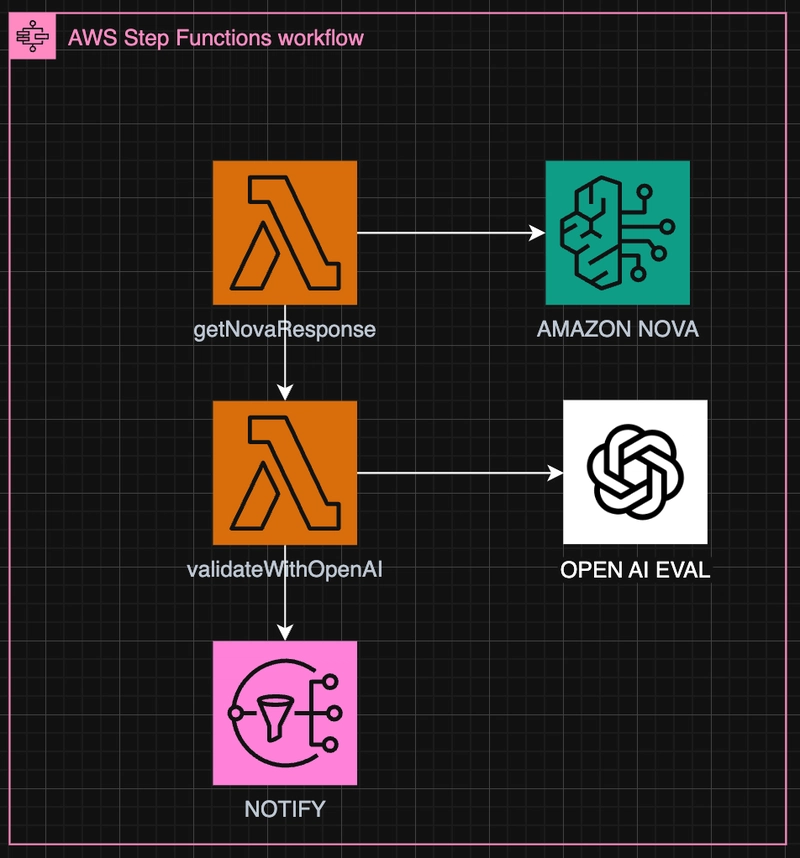

- ✍️ Nova (via Amazon Bedrock) generates a response

- 📊 OpenAI’s new Evals API grades the response

- 🤖 Step Functions + Lambda orchestrate it

- 🧱 CDK deploys the whole thing

- ✅ And we track responses over time to improve

Let’s go full-stack, eval-style baby

🧩 High level architecture

We’ll keep things TypeScript down xD

🛠 CDK stack

Here's a bare-bones CDK setup (in TypeScript) to deploy everything

const evalNotificationTopic = new sns.Topic(this, 'EvalNotificationTopic');

evalNotificationTopic.addSubscription(

new snsSubscriptions.EmailSubscription('your-email@example.com')

);

const getNova = new NodejsFunction(this, 'NovaFn', {

entry: 'lambda/getNovaResponse.ts',

environment: { REGION: 'us-east-1' }

});

const validateFn = new NodejsFunction(this, 'ValidateFn', {

entry: 'lambda/validateWithOpenAI.ts',

environment: {

OPENAI_API_KEY: process.env.OPENAI_API_KEY!,

}

});

const notifyTask = new tasks.SnsPublish(this, 'Notify', {

topic: evalNotificationTopic,

message: stepfunctions.TaskInput.fromText('Evaluation complete. Review results in OpenAI dashboard.'),

resultPath: stepfunctions.JsonPath.DISCARD,

});

const flow = new StepFunction(this, 'EvalFlow', {

definition: Chain

.start(new LambdaInvoke(this, 'Generate', { lambdaFunction: getNova }))

.next(new LambdaInvoke(this, 'Eval', { lambdaFunction: validateFn }))

.next(notifyTask)

});

What does this CDK stack drop?

- 2 Lambdas

- A Step Function workflow

- A SNS

Let’s break it down 👇

📦 Lambda 1 — Nova Response (getNovaResponse.ts)

const input = event.prompt ?? "What is the capital of France?";

const command = new ConverseCommand({

modelId: "amazon.nova-lite-v1:0",

messages: [{ role: "user", content: [{ text: input }] }],

inferenceConfig: { temperature: 0.5 },

});

const result = await client.send(command);

const output = result.output?.message?.content?.[0]?.text;

Returns something like:

{

"prompt": "What is the capital of France?",

"completion": "The capital of France is Paris."

}

🔍 Lambda 2 — Eval with OpenAI (validateWithOpenAI.ts)

const evalRequest = {

run_spec: {

eval_name: "cot-correctness",

args: {

samples_jsonl: [{

input: prompt,

completion: completion,

ideal: ["Paris"]

}]

}

}

};

Then POST to:

https://api.openai.com/v1/evals/runs

You get back a runId, which you can poll for results from (or you can automatically wait using another Step Function state if needed)

📊 Simulated Scenarios

Let’s run some examples and score them using the cot-correctness eval:

| Prompt | Nova Output | Expected | Eval Score |

|---|---|---|---|

| What is the capital of France? | Paris is the capital of France. | Paris | ✅ PASS |

| What is the square root of 64? | The square root of 64 is 8. | 8 | ✅ PASS |

| Who won the 2022 World Cup? | France won the 2022 World Cup. | Argentina | ❌ FAIL |

| What’s 17 + 26? | That would be 33. | 43 | ❌ FAIL |

| Define photosynthesis in 1 sentence. | Photosynthesis is how plants make food. | Reasonable | ✅ PASS |

🔁 How to improve When it fails

When your eval fails, here’s what you can do:

1. Tune inference settings

- Lower temperature for deterministic answers

- Increase

topPfor broader creativity (only if needed)

2. Improve prompt design

- What’s 17 + 26?

+ What’s 17 + 26? Answer only with the number

3. Upgrade your eval rubric

Use more specific Evals (e.g. cot-accuracy, exact-match, qa-relevance) or create custom ones to reflect your domain

4. Chain a retry state

In Step Functions, wrap failed evals with a retry flow using a different prompt phrasing or fallback model

That's all folks xD

Tidak ada komentar:

Posting Komentar